Unless you've been living under a rock for the past weekend, you heard about several documents published by The Guardian and The Washington Post that are (likely) from the NSA explaining how they deal with Tor. I wanted to take a look and analyze them from a technical standpoint.

The good news from these documents is that Tor is doing pretty well. There's a host of quotes that confirm what we hoped: that while there are a lot of techniques the NSA uses to attack Tor, they don't have a complete break. They say "We will never be able to de-anonymize all Tor users all the time" and that they have had "no success de-anonymizing a user in response to a request/on-demand". [0] We shouldn't take these as gospel, but they're a good indicator.

Now something else to underscore in the documents that were released, and in the DNI statement, is that bad people use Tor too. Tor is a technology, no different from Google Earth or guns - it can be used for good or bad. It's not surprising or disappointing to me that the NSA and GCHQ are analyzing Tor.

But from a threat modeling perspective - there's no difference between the NSA/GCHQ and China or Iran. They're all well-funded adversaries who can operate over the entire Internet and have co-opted national ISPs and inter-network links to monitor traffic. But from my perspective, the NSA is pretty smart, and they have unmatched resources. If they want to target someone, they're going to be able to do so - it's only a matter of putting effort into it. It's impossible to completely secure an entire ecosystem against them. But you can harden it. The documents we've seen say that they have operating concerns[1], and Schneier says the following:

The most valuable exploits are saved for the most important targets. Low-value exploits are run against technically sophisticated targets where the chance of detection is high. TAO maintains a library of exploits, each based on a different vulnerability in a system. Different exploits are authorized against different targets, depending on the value of the target, the target's technical sophistication, the value of the exploit, and other considerations.[4]

What this means to me is that by hardening Tor, we're ensuring that the attacks the NSA does run against it (which no one would be able to stop completely) will only be run against the highest value targets - real bad guys. The more difficult it is to attack, the higher the value of a successful exploit the NSA develops - that means the exploit is conserved until there is a worthwhile enough target. The NSA still goes after bad guys, while all state-funded intelligence agencies have a significantly harder time de-anonymizing or mass-attacking Tor users. That's speculation of course, but I think it makes sense.

That all said - let's talk about some technical details.

Fingerprinting

The NSA says they're interested in fingerprinting Tor users from non-Tor users[1]. They describe techniques that fingerprint the Exit Node -> Webserver connection, and techniques on the User -> Entry Node connection.

They mention that Tor Browser Bundle's buildID is 0, which does not match Firefox's. The buildID is a javascript property - to fingerprint with it, you need to send javascript to the client for them to execute. (TBB's behavior was recently changed, for those trying it at home.) But the NSA sitting on a website wondering if a visitor is from Tor doesn't make any sense. All Tor exit nodes are public. In most cases (unless you chain Tor to a VPN) - the exit IP will be an exit node, and you can fingerprint on that. Changing TBB's buildID to match Firefox may eliminate that one, single fingerprint - but in the majority of cases you don't need that.
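
To make the "you can fingerprint on that" point concrete, here is a minimal sketch of a server-side check against a list of exit addresses. The list contents and the function names are made up for illustration (the addresses come from the reserved documentation ranges); a real check would load whatever published exit list you trust.

```python
import ipaddress

def parse_exit_list(text):
    """Parse one IP address per line, skipping blank lines and '#' comments."""
    exits = set()
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        exits.add(ipaddress.ip_address(line))
    return exits

def is_tor_exit(client_ip, exits):
    """Return True if the connecting address appears in the exit set."""
    return ipaddress.ip_address(client_ip) in exits

# Hypothetical sample data, not real exit nodes
SAMPLE_EXIT_LIST = """
# exit addresses (made up)
192.0.2.10
198.51.100.7
2001:db8::1
"""

exits = parse_exit_list(SAMPLE_EXIT_LIST)
print(is_tor_exit("198.51.100.7", exits))  # True
print(is_tor_exit("203.0.113.5", exits))   # False
```

No javascript and no browser property is involved, which is why the buildID fingerprint adds so little for someone sitting on the website.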

What about the other direction - watching a user and seeing if they're connecting to Tor? Well, most of the time, users connect to publicly known Tor nodes. A small percentage of the time, they'll connect to unknown Tor bridges. Those bridges will then connect to known Tor nodes, and the bridges are distinguishable from a user/Tor client because they accept connections. So while a Global Passive Adversary could enumerate all bridges, the NSA is mostly-but-not-entirely-global. They can enumerate all bridges within their sphere of monitoring, but if they're monitoring a single target outside their sphere, that target may connect to Tor without them being sure it's Tor.

Well, without being sure it's Tor based solely on the source and destination IPs. There are several tricks they note in [2] that let them distinguish the traffic using Deep Packet Inspection. Those include a fixed TLS certificate lifetime, the Issuer and Subject names in the TLS certificate, and the DH modulus. I believe, but am not sure, that some of these have been changed recently in Tor, or are in the process of being redesigned - I need to follow up on that.

Another thing the documents go into length about is "staining" a target so they are distinguishable from all other individuals[3]. This "involves writing a unique marker (or stain) onto a target machine". The most obvious technique would be putting a unique string in the target's browser's User Agent. The string would be visible in HTTP traffic - which matches the description that the stain is visible in "passive capture logs".

However, the User Agent has a somewhat high risk of detection. While it's not that common for someone to look at their own User Agent using any of the many free tools out there - I wouldn't consider it unusual, especially if you were concerned with how trackable you were. Also, not to read too closely into a single sentence, but the documents do say that the "stain is visible in passively collected SIGINT and is stamped into every packet". "Every packet" - not just HTTP traffic.

If you wanted to be especially tricky, you could put a marker into something much more subtle - like TCP sequence numbers, IP flags, or IP identification fields. Someone had proffered the idea that a particularly subtle backdoor would be switching the system-wide Random Number Generator for Windows to DUAL_EC_DRBG using a registry hack.

Something else to note is that the NSA is aware of Obfs Proxy, Tor's obfuscated protocol to avoid nation-state DPI blocking; and also the tool Psiphon. Tor and Psiphon both try to hide as other protocols (SSL and SSH respectively.) According to the leaked documents, they use a seed and verifier protocol that the NSA has analyzed[2]. I'm not terribly familiar with the technical details there, so the notes in the documents may make more sense after I've looked at those implementations.

Agencies Run Nodes

Yup, Intelligence Agencies do run nodes. It's been long suspected, and Tor is explicitly architected to defend against malicious nodes - so this isn't a doomsday breakthrough. Furthermore, the documents even state that they didn't make many operational gains by running them. According to Runa, the NSA never ran exit nodes.

What I said in my @_defcon_ talk is still true: the NSA never ran Tor relays from their own networks, they used Amazon Web Services instead.

— Runa A. Sandvik (@runasand) October 4, 2013

The Tor relays that the NSA ran between 2007 and 2013 were NEVER exit relays (flags given to these relays were fast, running, and valid).

— Runa A. Sandvik (@runasand) October 4, 2013

Correction of https://t.co/U5v7krwZH0: the NSA #Tor relays were only running between 2012-02-22 and 2012-02-28.

— Runa A. Sandvik (@runasand) October 4, 2013

Something I'm going to hit upon in the conclusion is how we shouldn't assume that these documents represent everything the NSA is doing. As pointed out by my partner in a conversation on this - it would be entirely possible for them to slowly run more and more nodes until they were running a sizable percentage of the Tor network. Even though I'm a strident supporter of anonymous and pseudonymous contributions, it's still a worthwhile exercise to measure what percentage of exit, guard, and path probabilities can be attributed to node operators who are known to the community. Nodes like NoiseTor's, or torservers.net are easy, but also nodes whose operators have a public name tied into the web of trust. If we assume the NSA would want to stay as anonymous and deniable as possible in an endeavor to become more than a negligible percentage of the network - tracking those percentages could at least alert us to a shrinking percentage of nodes being run by people 'unlikely-to-be-intelligence-agencies'.

This is especially true because in [0 slide 21] they put forward several experiments they're interested in performing on the nodes that they do run:

- Deploying code to aid with circuit reconstruction

- Packet Timing attacks

- Shaping traffic flows

- deny-degrade/disrupt comms to certain sites
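
The measurement suggested earlier in this section (what share of guard and exit probability belongs to operators known to the community) could be sketched roughly like this. The relay records, the weights, and the `known_operator_share` helper are all hypothetical; real numbers would have to come from the consensus and from whatever operator attribution the community agrees on.

```python
from collections import defaultdict

def known_operator_share(relays, known_operators):
    """Return {position: fraction of total weight run by known operators}."""
    total = defaultdict(float)
    known = defaultdict(float)
    for relay in relays:
        for position, weight in relay["weights"].items():
            total[position] += weight
            if relay["operator"] in known_operators:
                known[position] += weight
    return {pos: known[pos] / total[pos] for pos in total if total[pos] > 0}

# Made-up weights purely for illustration
relays = [
    {"operator": "torservers.net", "weights": {"guard": 0.0, "exit": 30.0}},
    {"operator": "NoiseTor", "weights": {"guard": 10.0, "exit": 20.0}},
    {"operator": None, "weights": {"guard": 30.0, "exit": 50.0}},  # anonymous operator
]

shares = known_operator_share(relays, {"torservers.net", "NoiseTor"})
print(shares["guard"])  # 0.25
print(shares["exit"])   # 0.5
```

Tracked over time, a steady drop in these fractions would be the warning sign described above. It says nothing about who the anonymous operators are, only that their share is growing.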

Operational Security

Something the documents hit upon is what they term EPICFAIL - mistakes made by users that 'de-anonymize' them. It's certainly the case that some of the things they mention lead to actionable de-anonymization. Specifically, they mention some cookies persisting between Tor and non-Tor sessions (like Doubleclick, the ubiquitous ad cookie) and using unique identifiers, such as email and web forum names.

If your goal is to use Tor anonymously, those practices are quite poor. But something they probably need to be reminded of is that not everyone uses Tor to be anonymous. Lots of people log into their email accounts and Facebook over Tor - they're not trying to be anonymous. They're trying to prevent snooping and secure their web browsing from corporate proxies, their ISP, or national monitoring systems; to bypass censorship; or to disguise their point of origin.

So - poor OpSec leads to a loss of anonymity. But if you laughed at me because you saw me log into my email account over Tor - you missed the point.

Hidden Services

According to the documents, the NSA had made no significant effort to attack Hidden Services. However, their goals were to distinguish Hidden Services from normal Tor clients and harvest .onion addresses. I have a feeling the latter is going to be considerably easier when you can grep every single packet capture looking for .onion's.

But just because the NSA hadn't focused much on Hidden Services by the time the slides had been made doesn't mean others haven't. Weinmann, et al. authored an explosive paper this year on Hidden Services, where they are able to enumerate all Hidden Service addresses, measure the popularity of a Hidden Service, and in some cases, de-anonymize the HS. There isn't a much bigger break against HS than these results - if the NSA hadn't thought of this before Ralf, I bet they kicked themselves when the paper came out.

And what's more - the FBI had two high profile takedowns of Hidden Services - Freedom Hosting and Silk Road. While Silk Road appears to be a result of detective work finding the operator, and then following him to the server, I've not seen an explanation for how the FBI located or exploited Freedom Hosting.

Overall - Hidden Services need a lot of love. They need redesigning, reimplementing, and redeploying. If you're relying on Hidden Services for strong anonymity, that's not the best choice. But whether you are or not - if you're doing something illegal and high-profile enough, you can expect law enforcement to be following up sooner or later.

Timing De-Anonymization

This is another truly clever attack. The technique relies on "[sending] packets back to the client that are detectable by passive accesses to find client IPs for Tor users" using a "Timing Pattern" [0 slide 13]. This doesn't seem like that difficult of an attack - the only wrinkle is that Tor splits and chunks packets into 512-byte cells on the wire.

If you're in control of the webserver the user is contacting (or between the webserver and the exit node) - the way that I'd implement this is by changing the IP packet timing to be extremely uncommon. Imagine sending one 400-byte packet, waiting 5 seconds, sending two 400-byte packets, waiting 5 seconds, sending three 400-byte packets, and so on. What this will look like for the user is receiving one 512-byte Tor cell, then a few seconds later, two 512-byte Tor cells, and so on. While the website load may seem slow - it'd be almost impossible to see this attack in action unless you were performing packet captures and looking for irregular timing. (Another technique might ignore/override the TCP Congestion Window, or something else - there's several ways you could implement this.)
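
For anyone who does want to look for this in their own packet captures, here is a minimal sketch of the check. It assumes you have already extracted cell arrival times (in seconds) from a capture; the one-second gap threshold and both function names are my own choices for illustration, not anything from the slides.

```python
def group_bursts(timestamps, gap=1.0):
    """Group arrival times into bursts separated by more than `gap` seconds.

    Returns the number of cells seen in each burst, in order.
    """
    counts = []
    last = None
    for t in sorted(timestamps):
        if last is None or t - last > gap:
            counts.append(1)   # a long silence starts a new burst
        else:
            counts[-1] += 1    # still inside the current burst
        last = t
    return counts

def looks_like_staircase(counts, min_steps=3):
    """True if burst sizes run 1, 2, 3, ... for at least `min_steps` bursts."""
    return len(counts) >= min_steps and counts == list(range(1, len(counts) + 1))

# One cell, ~5s pause, two cells, ~5s pause, three cells
arrivals = [0.00, 5.01, 5.02, 10.03, 10.04, 10.05]
counts = group_bursts(arrivals)
print(counts)                        # [1, 2, 3]
print(looks_like_staircase(counts))  # True
```

The same grouping is what a passive observer on the client side would run, just against everyone's traffic instead of their own.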

Two other things are worth noting about this. First, the slides say "GCHQ has research paper and demonstrated capability in the lab". It's possible this attack has graduated out of the lab and is now being run live - this would be concerning because this is potentially an attack that could perform mass de-anonymization on Tor users. Second, it's extremely difficult to counter. The simplest countermeasures (adding random delays, cover traffic, and padding) can generally be defeated with repeated observations. That said - repeated observations are not always possible in the real world. I think a worthwhile research paper would be to implement some or all of these features, perform the attack, and measure what type of security margins you can gain.
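
As a toy illustration of why random delays alone lose to repeated observations: if each burst is delayed independently by a random amount, any single measurement of the gap between bursts is noisy, but the average over many measurements converges back to the true gap. This is a simulation under made-up parameters (a 5 second gap, up to 2 seconds of uniform delay), not a model of any real defense.

```python
import random
import statistics

def observed_gap(true_gap, max_delay, rng):
    """One observation of the gap between two bursts, each delayed independently."""
    first = rng.uniform(0.0, max_delay)
    second = true_gap + rng.uniform(0.0, max_delay)
    return second - first

def estimate_gap(true_gap, max_delay, observations, rng):
    """Average many noisy observations to recover the underlying gap."""
    return statistics.mean(
        observed_gap(true_gap, max_delay, rng) for _ in range(observations)
    )

rng = random.Random(2013)  # fixed seed so the run is repeatable
single = observed_gap(5.0, 2.0, rng)
averaged = estimate_gap(5.0, 2.0, 2000, rng)
print(f"single observation: {single:.2f}s")
print(f"mean of 2000 observations: {averaged:.2f}s")
```

A single observation can land anywhere between 3 and 7 seconds here, while the average sits very close to 5. That is the margin a real study would need to measure against cover traffic and padding too.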

There is also the question "Can we expand to other owned nodes?" These 'owned nodes' may be webservers they've compromised, Tor nodes they control, Quantum servers - it's not clear.

End-to-End Traffic Confirmation

Of all our academic papers and threat modeling - this is the one we may have feared the most. The NSA is probably the closest thing to a Global Passive Adversary we have - they're able to monitor large amounts of the Internet infrastructure, log it, and review it. In an End-to-End Traffic confirmation attack, they lay out the plan of attack in [0 slide 6]: "look for connections to Tor, from the target's suspected country, near time of target's activity". They're performing it in the reverse of how I generally think of it: instead of trying to figure out what this particular user is doing - they see activity on a website, and try to figure out which user is performing it.

There's no indication of the specifics of how they perform the query: they say they look for "connections to Tor", but does that mean single-hop directory downloads, circuit creation, initial conversation, or something else? Do they take into account packet timings? Traffic size? All of these things could help refine the attack.
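
Since the slides don't give the specifics, here is only a guess at the simplest possible form of that query: take every client seen connecting to Tor from the suspected country within some window of each observed activity, then intersect the candidate sets across several activities. The record layout, the 60 second window, and the function names are all assumptions of mine.

```python
def candidates(connections, event_time, country, window=60):
    """Clients seen connecting to Tor from `country` within `window` seconds of an event."""
    return {
        c["client"]
        for c in connections
        if c["country"] == country and abs(c["time"] - event_time) <= window
    }

def intersect_over_events(connections, event_times, country, window=60):
    """Keep only clients that show up near every observed event."""
    result = None
    for t in event_times:
        seen = candidates(connections, t, country, window)
        result = seen if result is None else result & seen
    return result or set()

# Hypothetical log of "connection to Tor" observations
connections = [
    {"client": "A", "country": "XX", "time": 990},
    {"client": "B", "country": "XX", "time": 1010},
    {"client": "D", "country": "YY", "time": 1000},
    {"client": "A", "country": "XX", "time": 4980},
    {"client": "C", "country": "XX", "time": 5020},
    {"client": "D", "country": "YY", "time": 5000},
]

print(intersect_over_events(connections, [1000, 5000], "XX"))  # {'A'}
```

Each extra observed activity shrinks the candidate set, which is why packet timings and traffic sizes would only be refinements on top of this.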

According to the slides, GCHQ has a working version dubbed QUICKANT. The NSA has a version as well that "produced no obvious candidate selectors". The NSA goals were to figure out if QUICKANT was working for GCHQ, and continue testing the NSA's version using profiles of "consistent, random and heavy user" - if we assume they don't like the Oxford comma, that's three profiles: a regular, consistent connection to a server, a random connection, and a heavy Tor user.

How do you frustrate End-to-End Confirmation attacks? Well, the bad news is in a Low Latency Onion Routing network - you don't. Ultimately it's going to be a losing proposition, so most of the time you don't try, and instead focus on other tasks. Just like "Timing De-Anonymization" above (which itself is a form of End-To-End Confirmation), it'd be worth investigating random padding, random delays, and cover traffic to see how much of a security margin you can buy.

Cookie De-Anonymization

This is a neat trick I hadn't thought of. They apparently have servers around the Internet that are dubbed "Quantum" servers that perform attacks at critical routing points. One of the things they do with these servers is to perform Man-in-the-Middle attacks on connections. The slides[0] describe an attack dubbed QUANTUMCOOKIE that will detect a request to a specific website, and respond with a redirection to Hotmail or Yahoo or a similar site. The client receives the redirect and will respond with any browser cookies they have for Hotmail or Yahoo. (Slides end, Speculation begins:) The NSA would then hop over to their PRISM interface for Hotmail or Yahoo, query the unique cookie identifier and try and run down the lead.

Now the thing that surprises me the most about this attack is not how clever it is (it's pretty clever though) - it's how risky it is. Let's imagine how it would be implemented. Because they're trying to de-anonymize a user, and because they're hijacking a connection to a specific resource - they don't know what user they're targeting. They just want to de-anonymize anyone who accesses, say, example.com. So already, they're sending this redirection to indiscriminate users who might detect it. Next off - the slides say "We detect the GET request and respond with a redirect to Hotmail and Yahoo!". You can't send a 300-level redirect to two sites, but if I was implementing the attack, I'd want to go for the lowest detection probability. The way I'd implement that is by doing a true Man-in-the-middle and very subtly adding a single element reference to Hotmail, Yahoo, and wherever else. The browser will request that element and send along the cookie. However, two detection points remain: a) if you use a javascript or css element, you risk the target blocking it and being alerted by NoScript and b) if the website uses Content Security Policy, you will need to remove that header also. Both points can be overcome - but the more complicated the attack, the more risky.
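
From the defender's side, an injected element reference is at least detectable in principle: the page suddenly pulls a resource from a host it normally doesn't. A rough sketch of that check, using only the Python standard library, is below. The hostnames are placeholders, and looking only at `src` and `href` attributes is a simplification I'm assuming for brevity.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

class ResourceHosts(HTMLParser):
    """Collect the hostnames referenced by src/href attributes in a page."""

    def __init__(self):
        super().__init__()
        self.hosts = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("src", "href") and value:
                host = urlparse(value).hostname  # None for relative URLs
                if host:
                    self.hosts.add(host)

def unexpected_hosts(html, expected):
    """Return hosts referenced by the page that are not in the expected set."""
    parser = ResourceHosts()
    parser.feed(html)
    return parser.hosts - set(expected)

# Placeholder page: one relative image, one first-party link, one odd 1x1 image
page = (
    '<html><body><img src="/logo.png">'
    '<img src="http://mail.example.net/pixel.gif" width="1" height="1">'
    '<a href="http://example.com/about">about</a></body></html>'
)

print(unexpected_hosts(page, {"example.com"}))  # {'mail.example.net'}
```

In practice nobody diffs the pages they load against a known-good copy, which is part of why the attack is lower risk than it first sounds.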

Exploitation

Finally - let's talk about the exploits mentioned in the slides.

Initial Clientside Exploitation

Let's first touch on a few obvious points. They mention that their standard exploits don't work against Tor Browser Bundle, and imply this may be because they are Flash-based. [0 slide 16, 1] But as Chrome and other browsers block older Flash versions, and click-to-play becomes more standard, any agency interested in exploitation would need to migrate to 'pure' browser-based exploits, which is what [1] indicates. [1] talks about two pure-Firefox exploits, including one that works on TBB based on FF-10-ESR.

This exploit was apparently a type confusion in E4X that enabled code execution via "the CTypes module" (which may be js-ctypes, but I'm not sure). [1] They mention that they can't distinguish the Operating System, Firefox version, or 32/64 bitness "until [they're] on the box" but that "that's okay" - which seems very strange to me because every attacker I know would just detect all those properties in javascript and send the correct payload. Does the NSA have some sort of cross-OS payload that pulls down a correct stager? Seems unlikely, I'll chalk this up to reading too much into semi-technical bullet points in a PowerPoint deck.

This vulnerability was fixed in FF-ESR-17, but the FBI's recent exploitation of a FF-ESR-17 bug (fixed in a point release most users had not upgraded to) shows that the current version of FF is just as easily exploited. These attacks show the urgency of hardening Firefox and creating a smooth update mechanism. The Tor Project is concerned about automatic updates (as they create a significant amount of liability in that signing key and the possibility of compelled updates) - but I think that could be overcome through multi-signing and distribution of trust and jurisdictions. Automatic updates are critical to deploy.

Hardening Firefox is also critical. If anyone writes XML in javascript I don't think I want to visit their website anyway. This isn't an exhaustive list, but some of the things I'd look at for hardening Firefox would be:

- Sandboxing - a broad category, but Chrome's sandboxing model makes exploitation significantly more difficult.

- The JIT Compiler

- All third party dependencies - review what they're used for, what percentage of the imported library is actually used, what its security history is, and if they can be shrunk, removed, or disabled. Just getting an inventory of these with descriptions and explanations of use will help guide security decisions.

- Obscure and little-used features - especially in media and CSS parsing. See if these features can be disabled in Tor Browser Bundle to reduce the attack surface, or blocked until whitelisted by NoScript. The E4X feature is a fantastic example of this. Little-used Web Codecs would be another.

- Alternate scheme support - looking at about:config with the filter "network.protocol-handler" it looks like there are active protocol handlers for snews, nntp, news, ms-windows-store (wtf?), and mailto. I think those first four can probably be disabled for 99% of users.

For what it's worth, [2 slide 46] mentions that Tails (the Tor live-DVD distribution) adds severe exploitation annoyances. Tails would prevent an attacker from remaining persistent on the machine.

Random Musings on Operations

There's a strange slide with some internal references in one of the presentations. It took me a couple read-throughs, but then something clicked as a possible explanation. This is speculation, but judge for yourself if you think it makes sense.

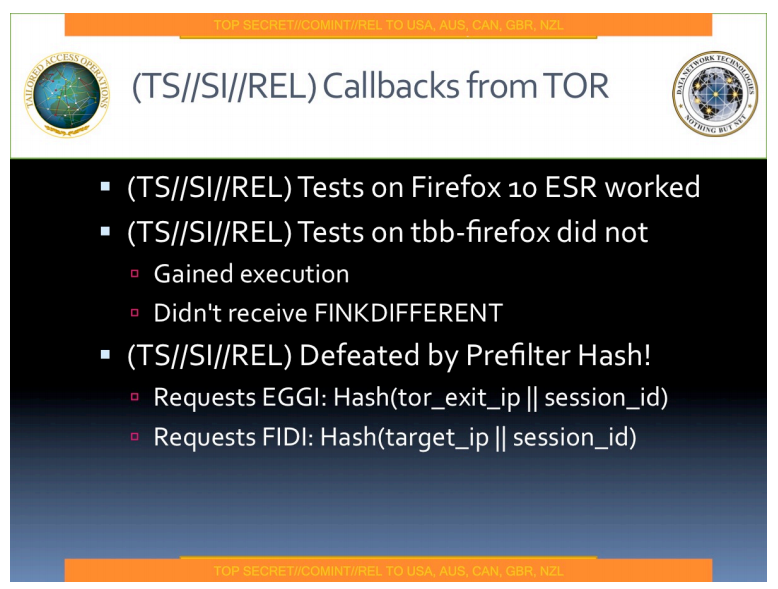

Apparently they tested their callback mechanism (the piece of code that will phone home to their server) and while it worked on normal Firefox, it didn't work on Tor Browser Bundle. It "gained execution" but "didn't receive FINKDIFFERENT", and that it was "defeated by prefilter hash".

When running a clientside exploitation test for a client, we'll limit our exploits from being served to random people outside their corporate network, usually using IP address filtering. If you're not in the netblock we expect - you don't get the exploit. For us, this is to avoid hacking anyone who hasn't asked for it - for the NSA it's (probably) to limit the chance of detection, and (hopefully) for legal reasons.

If their generic exploitation engine (which Schneier says is called FOXACID[4]) was built in a similar way, they would load the target's IP address into it, and have a mechanism that blocks exploitation unless the payload was accessed by that IP address. Tor would break that - there's the target IP address (which they may read from the machine), and there's the exit node's IP address which is likely read by the server sending the exploit.

I don't know what EGGI or FIDI are, but that's my interpretation of this slide.
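
To illustrate the interpretation above, here is the kind of netblock filter we'd use on a clientside test, and why a visitor arriving through Tor would fail it. The netblock and addresses are documentation-range placeholders, and this is only my reading of the slide, not a description of how FOXACID actually works.

```python
import ipaddress

def in_expected_netblock(observed_ip, netblocks):
    """True if the address the server sees falls inside an allowed netblock."""
    address = ipaddress.ip_address(observed_ip)
    return any(address in ipaddress.ip_network(block) for block in netblocks)

# Hypothetical values: the target's real network and a Tor exit's address
allowed_netblocks = ["203.0.113.0/24"]
target_direct_ip = "203.0.113.25"
tor_exit_ip = "192.0.2.77"

print(in_expected_netblock(target_direct_ip, allowed_netblocks))  # True
print(in_expected_netblock(tor_exit_ip, allowed_netblocks))       # False
```

The same person passes the filter when connecting directly and fails it over Tor, because the server only ever sees the exit node's address.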

Further Clientside Exploitation

There is also a section on dirty tricks that can be played if you've gained access to the user's machine. [0 slide 17] mentions "given CNE access to a target computer" - I interpret that to mean "given Computer Network Exploitation access" - that is "if we're controlling their box already, but we don't want to do something that may alert the user (like copying all their traffic to our IP), how can we subtly backdoor them". This is pretty far outside Tor's threat model - if you're running an application as the same user or as root/Administrator - there's not much a desktop operating system does to prevent one application (like a rootkit) from manipulating a second application (like Tor). But it's definitely worth examining their ideas:

- Alter path selection to use exit nodes they control or monitor closely - this could be done by modifying or replacing the Tor binaries on disk, or while it is running in memory

- Slide them onto an entire other Tor network - also possible to do on disk or in memory, all you'd need to do would be replace the hardcoded directory servers and signing keys

- Stain their user agent or traffic so they can identify it on the way out (as mentioned above, they say they can do this already)

- Have the computer call out and let them know where it is (which is what the FBI did in the Freedom Hosting situation)

Web Server Exploitation

Again, given a presence on the webserver (they control or have exploited it), they talk about sending the traffic out of the webserver in such a way that the Tor traffic going to the client is distinguishable. ([0 slide 18] and the Timing De-Anonymization section above)

Mass Node Exploitation

The fairly popular theory of "I bet the NSA has just exploited all the Tor nodes" seems to be partially debunked in [0 slide 19]. They explain "Probably not. Legal and technical challenges."

Tor Disruption

A popular topic in the slides is disrupting and denying access to Tor. My theory is that if they can make Tor suck for their targets, their targets are likely to give up on using Tor and use a mechanism that's easier to surveil and exploit. [0 slide 20] talks about degrading access to a web server they control if it's accessed through Tor. It also mentions controlling an entire network and denying, degrading, or disrupting the Tor experience on it.

Wide scale network disruption is put forward on [0 slide 22]. One specific technique they mention is advertising high bandwidth, but actually performing very slowly. This is a similar trick to one used by [Weinmann13], so the Tor project is both monitoring for wide scale disruption of this type and combatting it through design considerations.

Conclusion

The concluding slide of [0] mentions "Tor Stinks... But It Could be Worse". A "Critical mass of targets do use Tor. Scaring them away from Tor might be counterproductive".

Tor is technology, and can be used for good or bad - just like everything else. Helping people commit crimes is something no one wants to do, but everyone does - whether it's by just unknowingly giving a fugitive directions or selling someone a gun they use to commit a crime. Tor is a powerful force for good in the world - it helps drive change in repressive regimes, helps law enforcement (no, really), and helps scores of people protect themselves online. It's kind of naive and selfish, but I hope the bad guys are scared away, while we make Tor more secure for everyone else.

Something that's worth noting is that this is a lot of analysis and speculation off some slide decks that have uncertain provenance (I don't think they're lying, but they may not tell the whole truth), uncertain dates of authorship, and may be omitting more sensitively classified information. This is a definite peek at a playbook - but it's not necessarily the whole playbook. We should keep that in mind and not base all of our actions off these particular slide decks. But it's a good place to start and a good opportunity to reevaluate our progress.

[0] http://media.encrypted.cc/files/nsa/tor-stinks.pdf

[1] http://media.encrypted.cc/files/nsa/egotisticalgiraffe-wapo.pdf

[1.5] http://media.encrypted.cc/files/nsa/egotisticalgiraffe-guardian.pdf

[2] http://media.encrypted.cc/files/nsa/advanced-os-multi-hop.pdf

[3] http://media.encrypted.cc/files/nsa/mullenize-28redacted-29.pdf

[4] http://www.theguardian.com/world/2013/oct/04/tor-attacks-nsa-users-online-anonymity

[Weinmann13] http://www.ieee-security.org/TC/SP2013/papers/4977a080.pdf
